Microsoft DP-203 Practice Exams

- Exam code: DP-203

- Exam name: Data Engineering on Microsoft Azure

- Certification provider: Microsoft

- Last updated: 07.01.2026

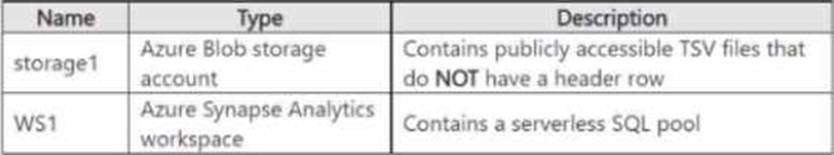

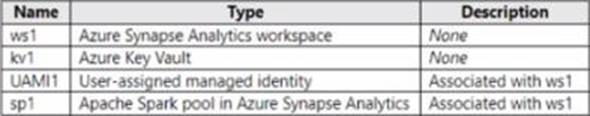

You have an Azure subscription that contains the resources shown in the following table.

You need to read the TSV files by using ad-hoc queries and the OPENROWSET function. The solution must assign a name to each column and override its inferred data type.

What should you include in the OPENROWSET function?

- A . the WITH clause

- B . the ROWSET_OPTIONS bulk option

- C . the DATAFILETYPE bulk option

- D . the DATA_SOURCE parameter
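For orientation, the sketch below shows how an OPENROWSET call can name columns and state their types explicitly instead of relying on schema inference. It is only an illustration: the helper `build_openrowset_query`, the storage URL, and the column names are hypothetical and do not come from the exhibit, and the generated T-SQL assumes a serverless SQL pool reading tab-separated files.

```python
def build_openrowset_query(bulk_path, columns):
    """Build a T-SQL query string that reads tab-separated files.

    columns is a list of (name, sql_type, ordinal) tuples. Each tuple
    gives a column an explicit name and type, so the engine does not
    have to infer them from the file contents.
    """
    column_defs = ",\n    ".join(
        f"[{name}] {sql_type} {ordinal}" for name, sql_type, ordinal in columns
    )
    return (
        "SELECT *\n"
        "FROM OPENROWSET(\n"
        f"    BULK '{bulk_path}',\n"
        "    FORMAT = 'CSV',\n"
        "    FIELDTERMINATOR = '\\t'\n"
        ") WITH (\n"
        f"    {column_defs}\n"
        ") AS [rows];"
    )


# Hypothetical storage path and columns, for illustration only.
query = build_openrowset_query(
    "https://example.dfs.core.windows.net/container/data/*.tsv",
    [("CustomerId", "INT", 1), ("CustomerName", "VARCHAR(100)", 2)],
)
print(query)
```

The printed statement would still have to be run against a real workspace with access to the files; the Python part only assembles the text.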

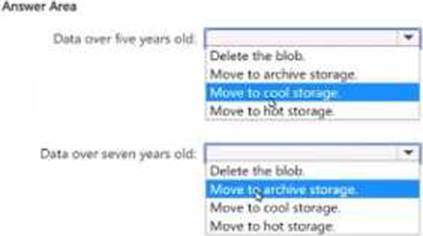

HOTSPOT

A company uses the Azure Data Lake Storage Gen2 service.

You need to design a data archiving solution that meets the following requirements:

• Data that is older than five years is accessed infrequently but must be available within one second when requested.

• Data that is older than seven years is NOT accessed.

• Costs must be minimized while maintaining the required availability.

How should you manage the data? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

You have an Azure subscription that contains a Microsoft Purview account.

You need to search the Microsoft Purview Data Catalog to identify assets that have an assetType property of Table or View.

Which query should you run?

- A . assetType IN ('Table', 'View')

- B . assetType:Table OR assetType:View

- C . assetType – (Table or view)

- D . assetType:(Table OR View)

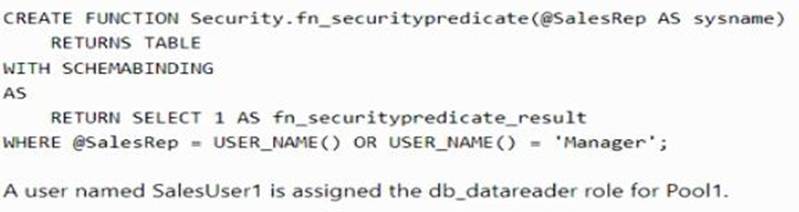

You have an Azure Synapse Analytics dedicated SQL pool named Pool1 that contains a table named Sales.

Sales has row-level security (RLS) applied. RLS uses the following predicate filter.

A user named SalesUser1 is assigned the db_datareader role for Pool1.

Which rows in the Sales table are returned when SalesUser1 queries the table?

- A . only the rows for which the value in the User_Name column is SalesUser1

- B . all the rows

- C . only the rows for which the value in the SalesRep column is Manager

- D . only the rows for which the value in the SalesRep column is SalesUser1

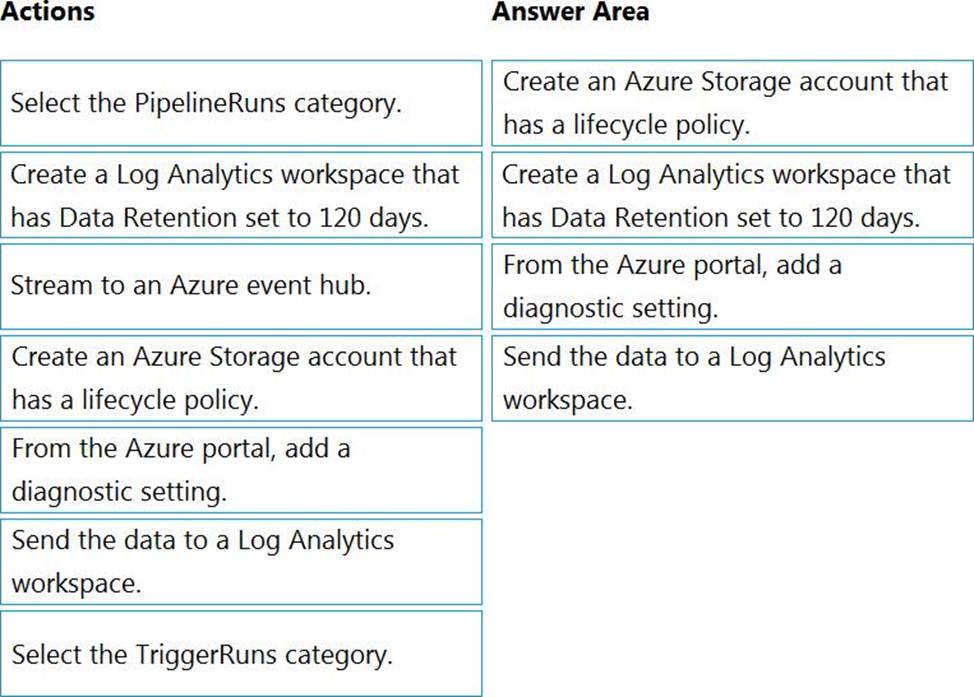

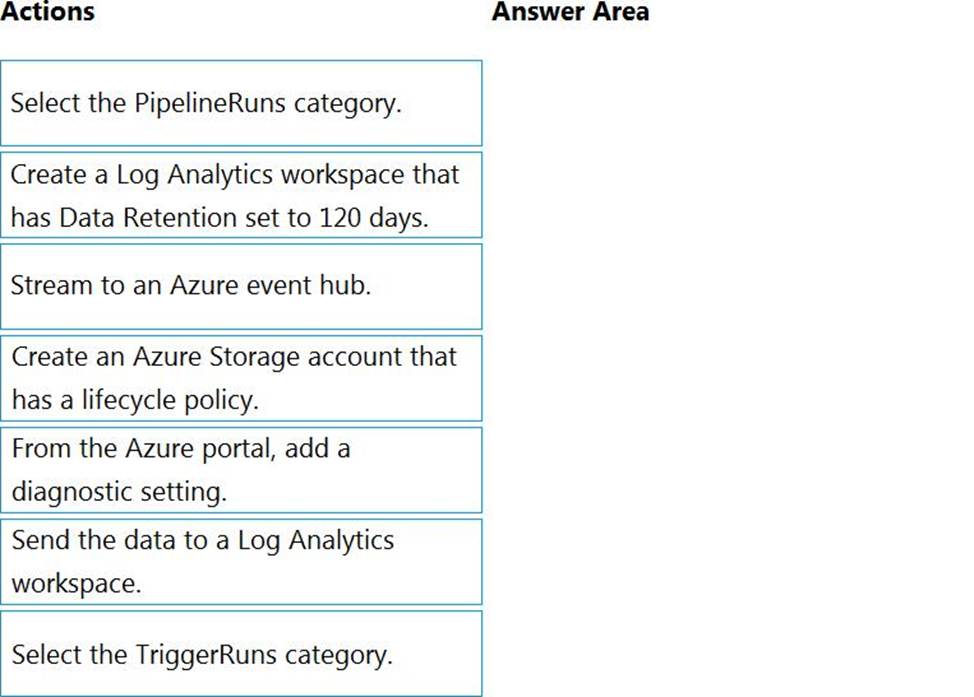

DRAG DROP

You have an Azure data factory.

You need to ensure that pipeline-run data is retained for 120 days. The solution must ensure that you can query the data by using Kusto Query Language.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

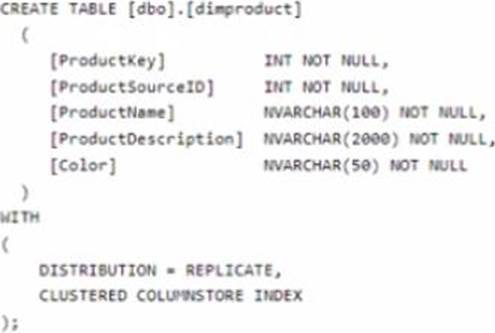

You are implementing a star schema in an Azure Synapse Analytics dedicated SQL pool.

You plan to create a table named DimProduct.

DimProduct must be a Type 3 slowly changing dimension (SCD) table that meets the following requirements:

• The values in two columns named ProductKey and ProductSourceID will remain the same.

• The values in three columns named ProductName, ProductDescription, and Color can change.

You need to add additional columns to complete the following table definition.

A)

![]()

B)

![]()

C)

![]()

D)

![]()

E)

![]()

F)

![]()

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

- F . Option F

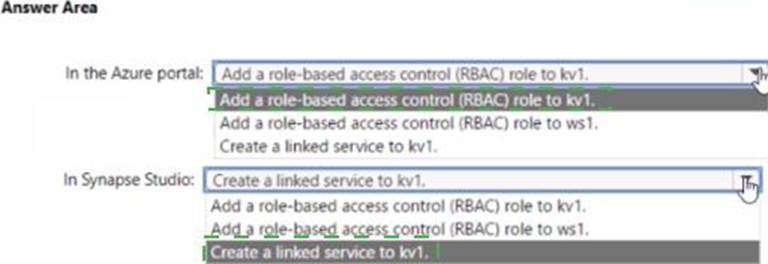

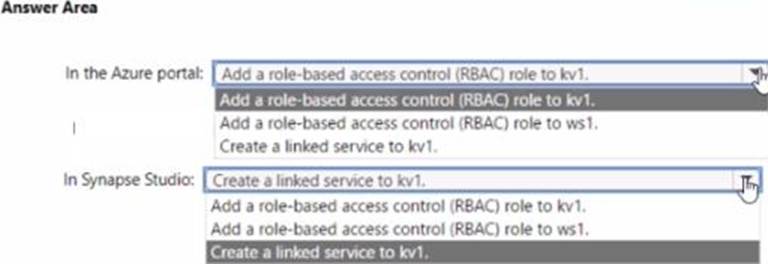

HOTSPOT

You have an Azure subscription that contains the resources shown in the following table.

You need to ensure that you can run Spark notebooks in ws1. The solution must ensure that secrets can be retrieved from kv1 by using UAMI1.

What should you do? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

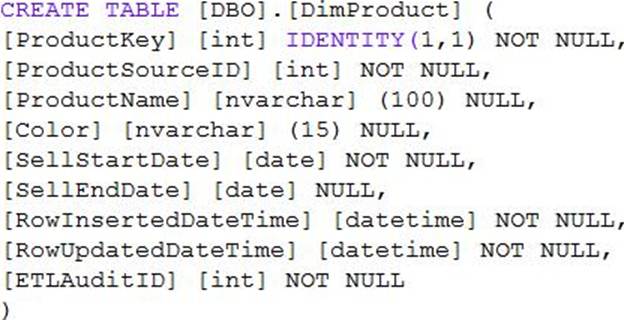

You need to implement a Type 3 slowly changing dimension (SCD) for product category data in an Azure Synapse Analytics dedicated SQL pool.

You have a table that was created by using the following Transact-SQL statement.

Which two columns should you add to the table? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . [EffectiveStartDate] [datetime] NOT NULL,

- B . [CurrentProductCategory] [nvarchar] (100) NOT NULL,

- C . [EffectiveEndDate] [datetime] NULL,

- D . [ProductCategory] [nvarchar] (100) NOT NULL,

- E . [OriginalProductCategory] [nvarchar] (100) NOT NULL,
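As background, a Type 3 slowly changing dimension keeps limited history in extra columns of the same row rather than adding a new row per change. The sketch below illustrates one common variant that stores an original value next to the current value. It uses an in-memory SQLite database purely so that it runs anywhere; the table `DimProductCategory`, its columns, and the helper `upsert_category` are hypothetical and are not the table from the question.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE DimProductCategory (
        ProductKey       INTEGER PRIMARY KEY,
        OriginalCategory TEXT NOT NULL,  -- set once, never overwritten
        CurrentCategory  TEXT NOT NULL   -- overwritten on every change
    )
    """
)


def upsert_category(conn, product_key, category):
    """Insert a new product, or overwrite only its current category."""
    exists = conn.execute(
        "SELECT 1 FROM DimProductCategory WHERE ProductKey = ?",
        (product_key,),
    ).fetchone()
    if exists is None:
        # First sighting: original and current values start out identical.
        conn.execute(
            "INSERT INTO DimProductCategory VALUES (?, ?, ?)",
            (product_key, category, category),
        )
    else:
        # Later change: the row count stays the same, history lives in columns.
        conn.execute(
            "UPDATE DimProductCategory SET CurrentCategory = ? WHERE ProductKey = ?",
            (category, product_key),
        )


upsert_category(conn, 1, "Bikes")
upsert_category(conn, 1, "E-Bikes")
row = conn.execute(
    "SELECT OriginalCategory, CurrentCategory FROM DimProductCategory"
).fetchall()
print(row)
```

Other Type 3 variants keep a previous value instead of the original one; which history columns are appropriate depends on the reporting requirement.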

You have an Azure subscription that contains an Azure Data Factory data pipeline named Pipeline1, a Log Analytics workspace named LA1, and a storage account named account1.

You need to retain pipeline-run data for 90 days.

The solution must meet the following requirements:

• The pipeline-run data must be removed automatically after 90 days.

• Ongoing costs must be minimized.

Which two actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Configure Pipeline1 to send logs to LA1.

- B . From the Diagnostic settings (classic) settings of account1, set the retention period to 90 days.

- C . Configure Pipeline1 to send logs to account1.

- D . From the Data Retention settings of LA1, set the data retention period to 90 days.

You have an Azure data factory.

You need to examine the pipeline failures from the last 60 days.

What should you use?

- A . the Activity log blade for the Data Factory resource

- B . the Monitor & Manage app in Data Factory

- C . the Resource health blade for the Data Factory resource

- D . Azure Monitor